AI experts hail multimodal AI as the ‘next big thing’ in the world of artificial intelligence. This innovative technology is transforming how machines interpret and interact with the world around them. But what is multimodal AI? How does it work, and how is it different from other AI models?

What is multimodal AI?

Multimodal artificial intelligence refers to AI systems that can simultaneously process and understand multiple types of data inputs. These inputs or ‘modalities’ can be text, images, video, voice, and more. Traditional AI models focus on a single type of data input. However, multimodal AI can combine and interpret information from multiple sources to deliver more sophisticated and comprehensive results.

A text-only customer support chatbot can answer written queries, but it cannot pick up on a customer’s mood and emotions. A multimodal AI system can. It can take into account the tone of voice, the wording of the text, and facial expressions (if there is a video), and so produce a response that fits the customer’s emotional state.

According to MarketsandMarkets, the multimodal AI market is projected to reach up to $4.5 billion by the end of 2028. This growth is driven by factors such as the wide-scale adoption of multimodal AI models, which offer businesses multiple benefits.

Contact Us Today

Get in touch to learn more about our services and how we can help you.

Benefits of multimodal AI systems

Multimodal AI systems offer a variety of benefits. Let’s look at a few of them!

Enhanced contextual understanding

A multimodal AI system can process multiple data inputs such as text, images, audio, and video. Integrating these inputs gives the system, and the business using it, a fuller understanding of the context than any single input could provide.

For example, in customer service, a multimodal AI can better interpret a customer’s complaint. It can take into account the text of their email, any relevant images and videos, and the customer’s tone of voice on a call.

Improved accuracy and reliability

A multimodal AI system is more accurate and reliable when it comes to validating information, because it can cross-validate what it learns from one modality against the others. Using different data types to confirm one another lets it reach well-informed conclusions.

For example, a multimodal AI can draw on several data sources to support a more accurate diagnosis. The data sources include medical imaging, lab tests, patient records, and more.
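
To make the cross-validation idea concrete, here is a minimal Python sketch. It assumes each modality already has its own model that returns a probability for each label. The function name `cross_check`, the modality names, and the numbers are all made up for illustration and do not come from any real diagnostic system. The sketch averages the per-modality probabilities and flags the modalities that disagree with the combined result.

```python
from typing import Dict


def cross_check(predictions: Dict[str, Dict[str, float]]) -> dict:
    """Combine per-modality label probabilities and report whether they agree.

    `predictions` maps a modality name to that modality's label probabilities.
    Assumes every modality reports at least one label.
    """
    if not predictions:
        raise ValueError("need at least one modality")

    labels = sorted({label for probs in predictions.values() for label in probs})

    # Average each label's probability across modalities (a missing label counts as 0).
    averaged = {
        label: sum(probs.get(label, 0.0) for probs in predictions.values()) / len(predictions)
        for label in labels
    }
    consensus = max(averaged, key=averaged.get)

    # Each modality's own top label, used to spot disagreement with the consensus.
    top_votes = {name: max(probs, key=probs.get) for name, probs in predictions.items()}
    dissenting = sorted(name for name, vote in top_votes.items() if vote != consensus)

    return {
        "label": consensus,
        "confidence": averaged[consensus],
        "all_agree": not dissenting,
        "dissenting": dissenting,
    }


# Hypothetical outputs from three separate unimodal models.
result = cross_check({
    "medical_imaging": {"pneumonia": 0.8, "healthy": 0.2},
    "lab_tests": {"pneumonia": 0.6, "healthy": 0.4},
    "patient_notes": {"pneumonia": 0.3, "healthy": 0.7},
})
print(result["label"], result["all_agree"], result["dissenting"])
```

Averaging is only one of several ways to combine modalities. The useful part here is the `dissenting` list: when one source contradicts the others, the case can be routed to a human instead of being answered automatically.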

Improved decision-making

Multimodal AI systems integrate a diverse range of data sources, which helps them make better-informed decisions. Their built-in ability to understand different types of data gives them a wider perspective from which to draw insights.

For example, a multimodal AI can integrate different types of data sources to produce strategic business insights and recommendations. The data sources include social media feedback, sales analytics, market trends, etc.

How does multimodal AI work?

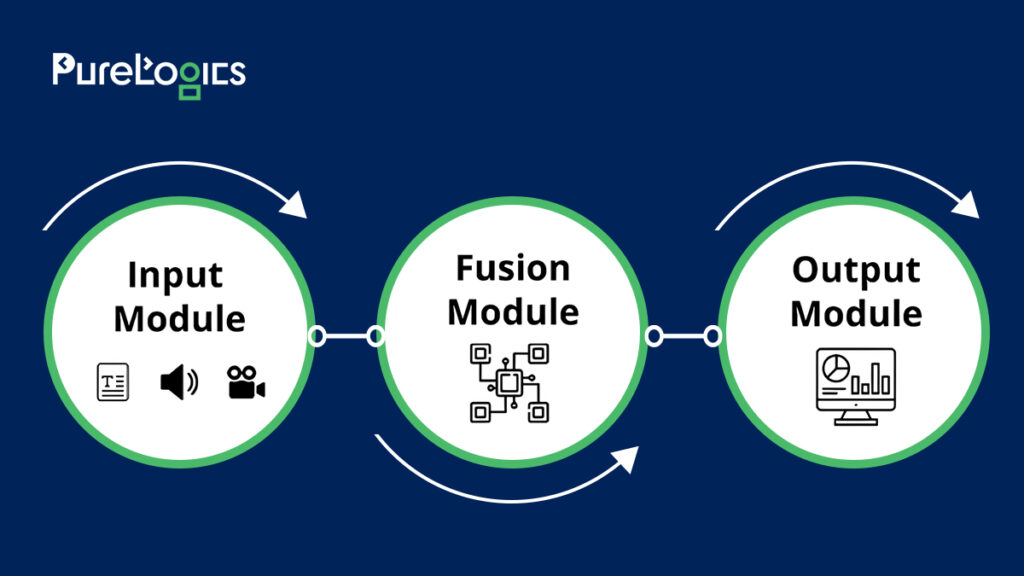

Multimodal AI systems are designed to identify patterns across diverse types of data inputs. A typical system consists of three elements:

The first element is the input module. It combines several neural networks that take in and process two or more data types. Because a separate neural network handles each type of data, every multimodal AI input module includes multiple unimodal neural networks.

The second element is the fusion module. It integrates and processes the essential data from every data type and takes advantage of the strengths of each.

The third element is the output module. It takes the fused data, which captures the overall context, and generates the final output of the multimodal AI system.
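
The three modules can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the "encoders" below are hand-written functions rather than trained neural networks, fusion is plain concatenation of the encoded features, and the output weights are invented. The names `encode_text`, `encode_audio`, `input_module`, `fusion_module`, and `output_module` are all hypothetical.

```python
from typing import Callable, Dict, List

Vector = List[float]


def encode_text(text: str) -> Vector:
    """Toy text encoder: [word count, share of characters that are '!']."""
    return [float(len(text.split())), text.count("!") / max(len(text), 1)]


def encode_audio(samples: List[float]) -> Vector:
    """Toy audio encoder: [mean absolute amplitude, peak amplitude]."""
    if not samples:
        return [0.0, 0.0]
    magnitudes = [abs(s) for s in samples]
    return [sum(magnitudes) / len(magnitudes), max(magnitudes)]


# Input module: one unimodal encoder per modality.
ENCODERS: Dict[str, Callable] = {"text": encode_text, "audio": encode_audio}


def input_module(inputs: Dict[str, object]) -> Dict[str, Vector]:
    """Run each raw input through the encoder for its modality."""
    return {name: ENCODERS[name](value) for name, value in inputs.items()}


def fusion_module(features: Dict[str, Vector]) -> Vector:
    """Concatenate the per-modality features in a fixed (alphabetical) order."""
    fused: Vector = []
    for name in sorted(features):
        fused.extend(features[name])
    return fused


def output_module(fused: Vector, weights: Vector, bias: float = 0.0) -> float:
    """Linear score over the fused vector; a stand-in for a trained output head."""
    return sum(w * x for w, x in zip(weights, fused)) + bias


features = input_module({"text": "Where is my order!", "audio": [0.1, -0.5, 0.2]})
fused = fusion_module(features)  # audio features first, then text features
score = output_module(fused, weights=[0.0, 1.0, 0.0, 0.0])  # made-up weights
print(fused, score)
```

In a real system each encoder would be a neural network and the fusion step would usually be learned as well, but the flow of data is the same: encode each modality separately, merge the results, then produce one output.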

Most popular multimodal AI systems

Here are five popular, widely used multimodal AI systems:

- GPT-4V

- Inworld AI

- Runway Gen-2

- Google Gemini

- Meta ImageBind

How are multimodal AI systems being used in different industries?

The versatility of multimodal AI has proved a game-changer for multiple industries. See how multimodal AI is being used today!

Healthcare: A multimodal AI system helps doctors personalize treatment and improve patient outcomes by analyzing medical images, genetic information, and patient records together.

Retail: When customers shop online, a multimodal AI system tracks what they have looked at. It analyzes their navigation journey and suggests products that match their interests and buying behavior, which makes for a better shopping experience.

Agriculture: Farmers are also employing multimodal AI to monitor their crops using soil information, weather data, and satellite images. This helps them decide when to water and how much fertilizer to use.

Manufacturing: Multimodal AI also helps factories interpret pictures and sounds to detect defects in products and make the manufacturing process smoother.

Entertainment: In entertainment, multimodal AI systems analyze series and movies, along with viewer reactions, to understand which characters users like and dislike. This helps providers create content that suits their viewers.

Challenges of building a multimodal AI system

A multimodal AI system is more difficult to build than a unimodal AI system, for several reasons:

Data integration: It is difficult to combine and synchronize multiple data types because of the different formats of the data from various sources.

Feature representation: Every modality has unique features and representation methods, which is a big challenge in building a multimodal AI system.

Dimensionality & scalability: Multimodal data is usually high-dimensional, and because every modality has its own unique set of characteristics, no single dimensionality-reduction mechanism works well across all of them. This can make models harder to scale as more modalities are added.

Availability of labeled data: It is difficult to collect and annotate data sets with different modalities. Moreover, it is expensive to maintain extensive multimodal training datasets.

Model architecture & fusion techniques: It is also challenging to design model architectures and fusion techniques to integrate information from diverse modalities.
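
To give a feel for the data integration challenge, the sketch below pairs video frames with audio chunks by timestamp. It is a minimal illustration, assuming both streams carry timestamps in seconds and that the audio timestamps are sorted. The function names `nearest_index` and `align` and the tolerance value are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def nearest_index(sorted_times: List[float], t: float) -> int:
    """Index of the entry in a non-empty sorted list that is closest to t."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    return i if (after - t) < (t - before) else i - 1


def align(
    video_times: List[float], audio_times: List[float], tolerance: float
) -> List[Tuple[float, Optional[float]]]:
    """Pair each video timestamp with the nearest audio timestamp.

    A pair is kept only if the two are within `tolerance` seconds;
    otherwise the video frame is paired with None.
    """
    if not audio_times:
        return [(t, None) for t in video_times]
    pairs = []
    for t in video_times:
        candidate = audio_times[nearest_index(audio_times, t)]
        pairs.append((t, candidate if abs(candidate - t) <= tolerance else None))
    return pairs


# Hypothetical timestamps: the last video frame has no audio chunk nearby.
pairs = align([0.0, 0.5, 1.0], [0.02, 0.48, 1.4], tolerance=0.05)
print(pairs)
```

Real pipelines also have to deal with different sampling rates, clock drift, and missing data, so this covers only the simplest part of the synchronization problem.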

Are multimodal AI systems the future?

Multimodal AI is reinventing the AI game by combining data from diverse sources for a more connected world. OpenAI joined the multimodal AI race on September 25, 2023, adding speech recognition and image analysis for its mobile applications. Google’s chatbot, Bard, has supported multimodal input since July 2023. Today, ChatGPT not only understands text but can also hold voice conversations and recognize images.

Let PureLogics’ Generative AI Development Services Empower Your Future!

Businesses, an extraordinary journey awaits you! Dive into the world of multimodal AI to ride the wave of innovation. We offer a free 30-minute consultation call. Talk to our experts and discover how our proven generative AI development services can unlock the potential of your business. Give us a call now!

The post Multimodal AI: What It Is and How It Works first appeared on PureLogics.